Xuan Shen, Yizhou Wang, Xiangxi Shi, Yanzhi Wang, Pu Zhao, Jiuxiang Gu

arXiv preprint (cs.CL), 2025

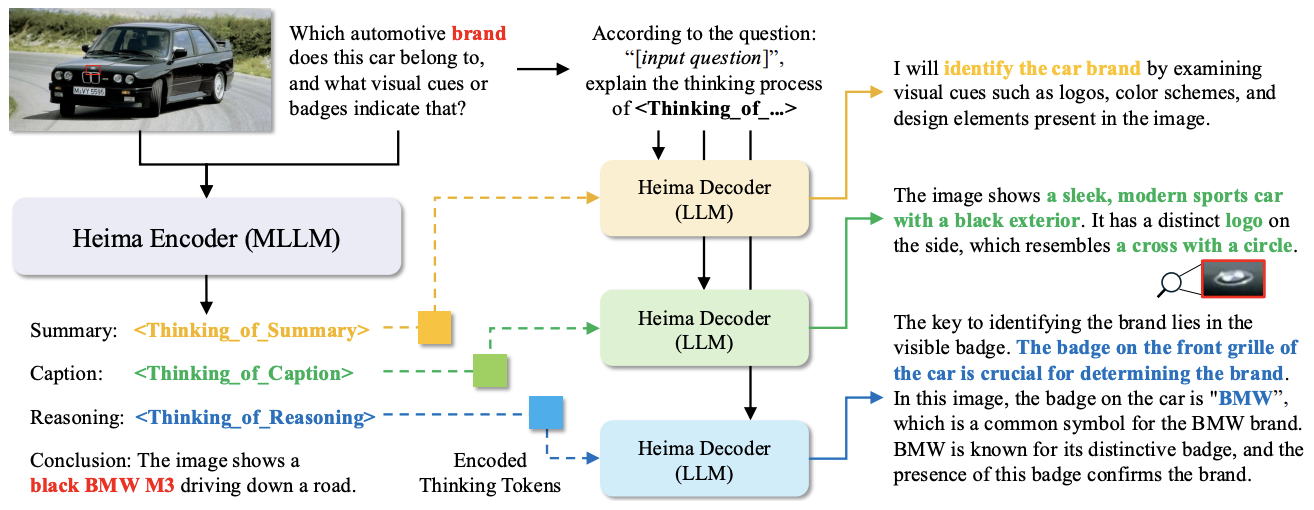

Chain-of-Thought (CoT) reasoning can improve complex problem-solving in Multimodal Large Language Models (MLLMs), but verbose textual reasoning is inefficient. This paper proposes Heima, an efficient reasoning framework that performs "hidden thinking": each intermediate CoT is condensed into a compact hidden representation carried by a single thinking token. A corresponding Heima Decoder, built on a standard LLM, can reconstruct variable-length textual reasoning from these hidden representations.

- Heima Encoder: encodes each step of CoT into a single thinking token to reduce generated tokens.

- Heima Decoder: reconstructs human-readable reasoning from hidden thinking tokens for interpretability.

- Efficiency: generates substantially fewer tokens while maintaining or improving zero-shot accuracy across MLLM reasoning benchmarks.
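To make the source of the token savings concrete, here is a minimal sketch of the counting argument only. It is not the paper's implementation: the `Stage` class, the `<THINK_i>` placeholder names, the whitespace "tokenizer", and the example reasoning text are all hypothetical and chosen purely for illustration. It assumes that each reasoning stage costs exactly one generated token once condensed, as the encoder bullet describes.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One intermediate CoT stage (hypothetical container, not from the paper)."""
    name: str
    text: str


def count_tokens(text: str) -> int:
    # Whitespace split is a crude stand-in for a real tokenizer (assumption).
    return len(text.split())


def condense(stages: list[Stage]) -> list[str]:
    # Each stage is replaced by one placeholder thinking token.
    # The "<THINK_i>" naming is illustrative only.
    return [f"<THINK_{i}>" for i, _ in enumerate(stages)]


def generated_token_counts(stages: list[Stage], answer: str) -> tuple[int, int]:
    """Return (verbose_count, hidden_count) of generated tokens."""
    answer_tokens = count_tokens(answer)
    verbose = sum(count_tokens(s.text) for s in stages) + answer_tokens
    hidden = len(condense(stages)) + answer_tokens
    return verbose, hidden


# Made-up example data, not taken from any benchmark.
stages = [
    Stage("summary", "The question asks how many apples remain after some are eaten"),
    Stage("reasoning", "Start with five apples then subtract the two eaten apples to get three"),
]
answer = "3"

verbose, hidden = generated_token_counts(stages, answer)
print(f"verbose CoT: {verbose} tokens, hidden thinking: {hidden} tokens")
```

In this toy setting the generated length under hidden thinking depends only on the number of stages and the answer length, not on how long each stage's text would have been. The sketch says nothing about accuracy or about how the hidden representations are learned.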


BibTeX

@misc{shen2025efficient,
  title = {Efficient Reasoning with Hidden Thinking},
  author = {Xuan Shen and Yizhou Wang and Xiangxi Shi and Yanzhi Wang and Pu Zhao and Jiuxiang Gu},
  year = {2025},
  eprint = {2501.19201},
  archivePrefix = {arXiv},
  primaryClass = {cs.CL},
  url = {https://arxiv.org/abs/2501.19201}
}